AMD Xilinx, a leading supplier of semiconductors and audiovisual (AV) connectivity devices, continues to position its Zynq UltraScale+ multiprocessor system-on-chip (MPSoC) as a platform for multimedia streaming, backed by reference designs and development tools.

AMD Xilinx's pro-AV and broadcasting systems have become widely used among companies developing next-generation extended reality (XR) solutions, as they offer high performance, customisation, low-latency multichannel pipelines, and extensive integration features, along with support for multiple open-source applications.

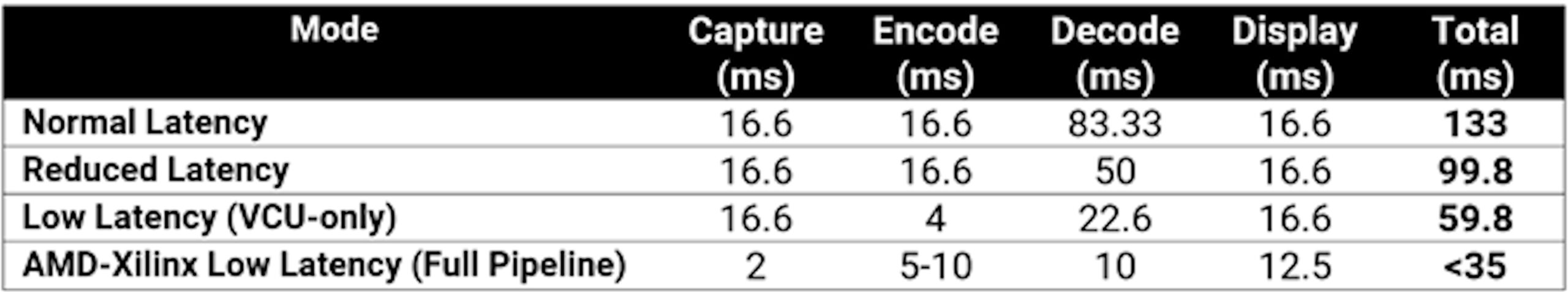

The Zynq UltraScale+ solution integrates a video codec unit (VCU) that compresses and decompresses 10-bit H.264/H.265 video at up to 4K resolution and 60 frames per second (fps) with minimal latency.
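
To show why a hardware codec matters at this resolution, the short Python sketch below computes the uncompressed bitrate of a 4K60 10-bit stream. The helper name `raw_bitrate_bps` is hypothetical, and the chroma-subsampling factors (4:2:0 and 4:2:2, both formats the VCU is generally described as handling) are assumptions chosen for illustration rather than figures from AMD Xilinx.

```python
# Hypothetical helper: uncompressed video bitrate in bits per second.
# Assumed average samples per pixel for each chroma subsampling scheme:
# 4:2:0 -> 1 luma + 0.5 chroma, 4:2:2 -> 1 luma + 1 chroma, 4:4:4 -> 3.
SAMPLES_PER_PIXEL = {"4:2:0": 1.5, "4:2:2": 2.0, "4:4:4": 3.0}


def raw_bitrate_bps(width: int, height: int, fps: int, bit_depth: int,
                    chroma: str = "4:2:0") -> int:
    """Return the raw (pre-encoding) bitrate of a video stream in bits/s."""
    bits_per_pixel = SAMPLES_PER_PIXEL[chroma] * bit_depth
    return int(width * height * fps * bits_per_pixel)


if __name__ == "__main__":
    # 3840x2160 at 60 fps with 10-bit samples, in two chroma formats.
    for chroma in ("4:2:0", "4:2:2"):
        bps = raw_bitrate_bps(3840, 2160, 60, 10, chroma)
        print(f"4K60 10-bit {chroma}: {bps / 1e9:.2f} Gbit/s")
```

Running it prints roughly 7.46 Gbit/s for 4:2:0 and 9.95 Gbit/s for 4:2:2, which is the raw data rate an encoder such as the VCU must reduce before a stream can be carried over a typical network link.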

AMD Xilinx VCU latency modes. PHOTO: AMD Xilinx

The system's Arm-based processing subsystem (PS) provides multicore processing for operating systems, drivers, and high-performance peripherals, the company explained.

The Zynq UltraScale+ MPSoC's targeted reference design (TRD) supplies individual intellectual property (IP) cores and system infrastructure blocks as fully validated design modules covering common AV use cases. This allows firms to build capture, encode, decode, and display pipelines across a range of connectivity interfaces, double data rate (DDR) memory configurations, and video formats.

The ZCU106 Evaluation Kit that hosts the design also ships with pre-built images, along with validated IP cores, source code, project files, drivers, and build flows that let developers fine-tune VCU performance parameters for numerous use cases.

The solution also relies on AMD Xilinx's Vivado IP Integrator (IPI) for hardware design and its PetaLinux tools for software development.

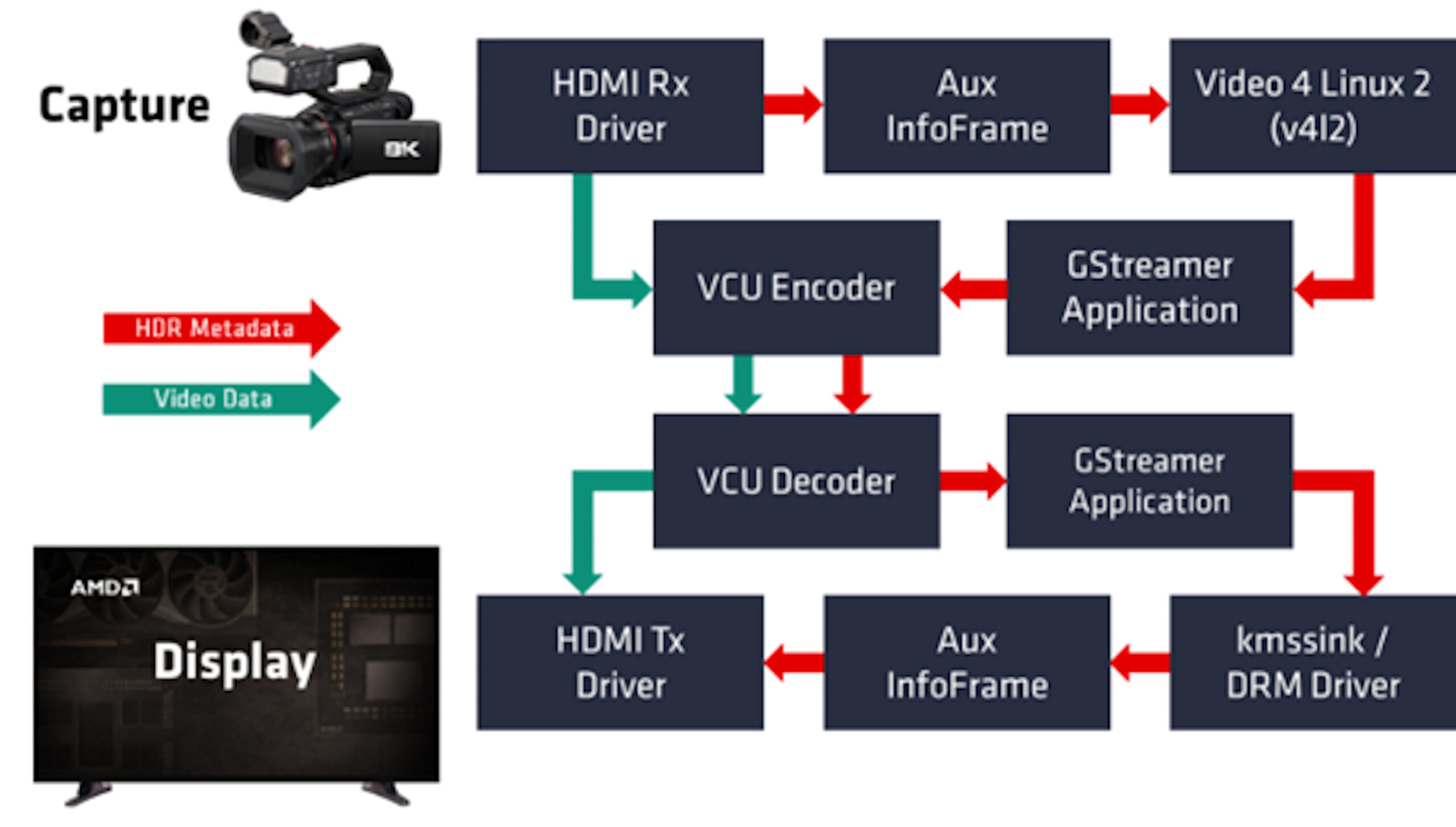

The platform also uses the open-source GStreamer multimedia framework, which lets operators chain audio, video, and data elements into flexible multimedia pipelines that can be launched with only a few GStreamer commands.

It also supports open-source GStreamer plugins, which connect AV design components to the TRD and ease interaction between them.
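
The pipeline model described above can be illustrated with a minimal Python sketch that only assembles a `gst-launch-1.0` command string, linking elements with GStreamer's `!` operator in a capture, encode, and store chain. The helper names, device path, resolution defaults, and output file are hypothetical. `v4l2src`, `h265parse`, and `filesink` are standard GStreamer elements, while `omxh265enc` is assumed here to be the OpenMAX-based H.265 encoder element exposed for the VCU on AMD Xilinx images; confirm the element name and its supported caps with `gst-inspect-1.0` on the target before relying on it.

```python
from typing import Sequence


def build_pipeline(stages: Sequence[str]) -> str:
    """Link pipeline stages using gst-launch's ' ! ' syntax."""
    if not stages:
        raise ValueError("a pipeline needs at least one element")
    return " ! ".join(stage.strip() for stage in stages)


def capture_encode_pipeline(device: str = "/dev/video0",
                            width: int = 3840, height: int = 2160,
                            fps: int = 60, pixel_format: str = "NV12",
                            encoder: str = "omxh265enc",
                            out_path: str = "capture.h265") -> str:
    """Hypothetical capture -> VCU encode -> file pipeline description.

    The encoder element name is an assumption; pass a different one
    if the target image exposes the VCU under another name.
    """
    # Caps filter pinning the raw format coming out of the capture source.
    caps = (f"video/x-raw,format={pixel_format},"
            f"width={width},height={height},framerate={fps}/1")
    stages = [
        f"v4l2src device={device}",      # Video4Linux2 capture source
        caps,                            # raw-video caps filter
        encoder,                         # hardware H.265 encoder (assumed)
        "h265parse",                     # parse the H.265 elementary stream
        f"filesink location={out_path}", # write the bitstream to disk
    ]
    return "gst-launch-1.0 " + build_pipeline(stages)


if __name__ == "__main__":
    print(capture_encode_pipeline())
```

Building the command as a list of stages keeps each element swappable, for example replacing `filesink` with a network sink or changing the encoder, without rewriting the whole string.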

The platform comes after major efforts from AMD Xilinx to build novel hardware and software systems for the broadcast, pro-AV, and related markets.

AMD Xilinx Engages Pro-AV, Stereoscopic Filmmaking

The news comes after AMD released several key updates for its portfolio of pro-AV and broadcasting solutions, including its Versal AI Core series of processors.

Canon, one of the world's largest camera companies, selected the chipmaker for its immersive Free Viewpoint Video System to transform live sports broadcasts and webcasts, allowing audiences to view footage from virtually any angle within a venue.

At Integrated Systems Europe (ISE 2022), XR Today also spoke to Ramesh Iyer, Senior Director of Pro Audiovisual (AV), Broadcast, and Consumer for AMD, who explained how his company's adaptive compute engines provide bespoke processors for XR broadcasters and companies.

Using the versatile system, firms could include “different graphics layers and different video content to the chair, mixing overlays in real-time, at a very low power envelope, and show that to TV audiences,” Iyer said at the time.