What is EMG, and what does it have to do with the extended reality landscape?

EMG, or “Electromyography,” isn’t something most people would usually associate with augmented, virtual, and mixed reality experiences. At a basic level, EMG is a diagnostic procedure used in the medical world to evaluate the health condition of nerve cells and muscles.

However, EMG technology doesn’t just have applications in the diagnostic landscape. At its core, electromyography technology allows for digital data collection about a person’s muscle movements and nerve control.

Because of this, solutions like “EMG” input could form an essential part of the next era of spatial computing in the extended reality world.

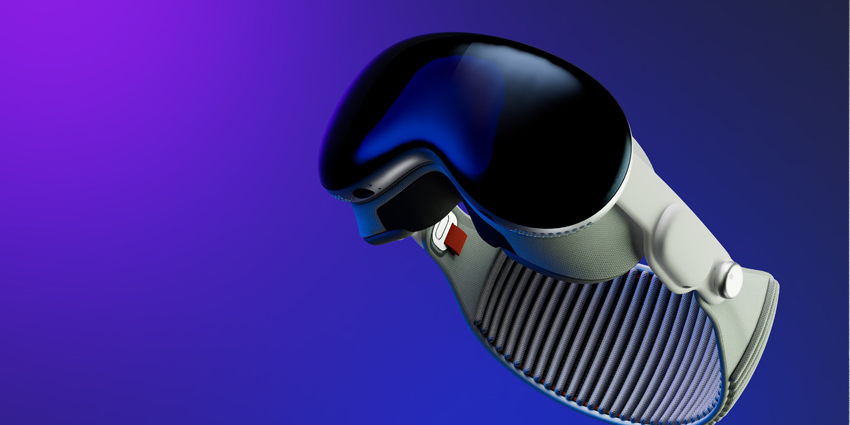

If the next generation of XR headsets, from smart glasses to mixed reality tools like the Apple Vision Pro, can track muscle and nerve input, they can offer a new user interface. These tools could empower us to interact with machines more “naturally” than ever before.

What is EMG? An Introduction to Electromyography

Let’s start with the basics: what is EMG, exactly?

Electromyography, or EMG, is a diagnostic procedure used to evaluate the health of nerve cells and muscles. EMG tools track and monitor motor neurons, examining the electrical signals that cause muscles to contract or relax.

An EMG machine can translate those electrical signals into data. In the medical world, this data is usually presented as graphs and numbers that doctors can use to make a diagnosis. In the XR world, this data could be translated into user interface instructions.

The answer to “what is an EMG” in the medical world can seem daunting. Usually, it involves tests that insert small needles through the skin and into the muscle. The electrical activity is then amplified and displayed on an oscilloscope.

However, in the XR world, headset manufacturers probably won’t try to sell products with such an invasive user interface. It’s hard to imagine any company earning massive sales from a headset equipped with needles and electrodes.

What is EMG Technology in Extended Reality?

So, what is EMG in extended reality?

In the XR world, EMG technology is based on the same concepts used in the medical environment. Using EMG hardware and sensors, companies can detect and record electrical activity from muscles. This activity can then be converted into input for XR wearables and software.
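As a rough illustration of how recorded muscle activity might become input, the sketch below rectifies a stream of raw samples, smooths them into an activation envelope, and thresholds that envelope into an on/off state. The function names, the window size, the threshold, and the sample values are all assumptions made up for this example; it does not describe any particular vendor's pipeline.

```python
from collections import deque


def emg_envelope(samples, window=5):
    """Rectify raw EMG samples and smooth them with a moving average."""
    recent = deque(maxlen=window)  # holds the last `window` rectified samples
    envelope = []
    for sample in samples:
        recent.append(abs(sample))  # full-wave rectification
        envelope.append(sum(recent) / len(recent))
    return envelope


def to_input_states(envelope, threshold=0.5):
    """Map each envelope value to a binary 'muscle active' input state."""
    return [value >= threshold for value in envelope]


# Hypothetical samples: quiet baseline, a short burst of activity, then rest.
raw = [0.02, -0.03, 0.01, 0.9, -1.1, 1.0, -0.95, 0.05, -0.02, 0.01]
states = to_input_states(emg_envelope(raw, window=3), threshold=0.5)
print(states)
```

In practice the threshold would likely need tuning per user and per sensor placement, which is one reason calibration matters for EMG input.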

On a basic level, EMG solutions could help developers conduct proper research into new ways of enhancing user experience.

EMG technology could also allow for the development of non-intrusive, wearable technologies designed to bring haptics into the XR world.

AR and EMG-ready immersive devices enable human-computer interaction (HCI) that removes the need for traditional input methods like a mouse or keyboard. The EMG device user doesn’t need to move their wrists, hands, or limbs.

Instead, the user’s intention to move sends an electrical signal to the muscles in the limb. EMG-ready devices detect this signal and transform it into computer input for navigation and control.

Authors from the Chinese University of Hong Kong and New York University even published a study on the potential for EMG technology in XR. Their research involved using EMG sensors and force-sensitive pressure pads to create a neural interface for the virtual world.

The researchers showed that the EMG sensors, placed on a subject’s forearm, could help XR systems decode user movements more effectively in real-time. What’s more, the technology required virtually no calibration to set up.

Why is Electromyography Beneficial to XR?

Bringing medical technology into the extended reality space is nothing new. Companies have used heart sensors, biometric scanners, and monitors for years to improve user interactions. Primarily, using EMG solutions in XR paves the way for an enhanced spatial computing experience, eliminating the need for traditional input with mice and keyboards.

Introducing a sense of physicality and immersion into XR has always been a core focus for developers. Although teams have accomplished significant advancements with eye-tracking and motion-sensing tools, a gap remains.

Closing the loop between the “human” and the “user interface” requires a new focus on bringing spatial capabilities into the XR experience. The more information a computer can gather from a person’s movements, actions, or nerve responses, the better it can respond.

EMG technology provides XR solutions with the insights they need to rework the user interface. Already, various applications for this technology have emerged.

For example, ongoing research proposes that people with cerebral palsy may be able to use an EMG-enabled interface to coordinate movements and recreate muscular control in the virtual world.

How Meta is Using EMG Technology in AR

Though using EMG and similar technologies in XR might seem like a sci-fi concept, initiatives are already in motion. Consider the augmented reality marketplace, where the smart glasses ecosystem isn’t growing as quickly as it could be.

While these tools can offer exceptional benefits, particularly for frontline workers and manufacturers, they lack the engaging user interface required for a streamlined experience.

The answer could be to investigate new ways of bringing “human input” into the XR user interface.

With the debut of Nreal and Ray-Ban devices, consumers are encountering smart glasses that contain increasingly sophisticated components and software. Estimates predicted that consumer-grade AR smart glasses vendors would ship roughly 14.19 million units in 2022.

Vendors like Meta are taking advantage of this opportunity for growth with deep investments in user interface R&D. The Meta brand has invested millions into various forms of neural biofeedback technology, including EMG systems, to transform the AR glasses industry.

What is EMG Doing for Brands like Meta?

Meta’s research into neural biofeedback technologies like EMG could empower it to create a more seamless extended reality experience for everyone. The brand’s current research aims to create a pair of glasses and an EMG wristband that transforms digital interactions.

Rather than using a set of controllers, as with the Meta Quest, you’d use your natural movements to navigate through content and interact with virtual assets. Even thinking about what you wanted to do could send electrical impulses to your muscles that an EMG sensor could pick up.

Meta’s EMG journey started in 2019 when it acquired CTRL Labs. Estimates put the cost of the purchase for the Menlo Park-based firm somewhere between $500 million and $1 billion.

At that time, CTRL Labs was among the few companies looking at EMG from an XR perspective. The other well-known innovator at the time was Thalmic Labs, now “North,” which has since been purchased by Google.

When Meta acquired CTRL Labs, the firm adopted the company’s critical IP in advanced EMG models. The CTRL Labs company had already developed custom virtual keyboards capable of adapting to a user’s unique typing patterns based on electrical activity from the muscles.

Meta also bought the haptics startup Lofelt in 2022. The purchase gives the firm the technology to create haptic feedback systems to support its EMG and HCI roadmaps.

The Meta Journey through User Interface R&D

Meta’s strategic acquisitions in the last few years have helped form the foundations of its strategy for introducing EMG and similar tech to the XR world. Through the “Reality Labs” division, Meta has made several strides toward creating a neural user interface.

In 2015, Facebook started work on an input device for AR glasses. It decided that a wrist-based wearable would be the most ergonomic way to collect data and began working on designs. Meta is working on several prototypes to study wristband haptics, including Bellowband and Tasbi.

Before acquiring EMG technologies through CTRL Labs, Meta explored contextualized AI and other UI strategies without great success. After buying CTRL Labs, Meta began looking for ways to use its EMG sensors effectively. Initial concepts included a sensor for monitoring a “click” action (pinch and release) that represents the click of a mouse button.
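To make the pinch-and-release “click” idea more concrete, here is a minimal sketch of how such an event could be detected from a smoothed muscle-activation signal. It uses two thresholds (hysteresis) so that a noisy signal hovering near one level does not fire repeated clicks. The function name, the threshold values, and the sample data are hypothetical, and this is not a description of Meta’s actual implementation.

```python
def detect_clicks(envelope, press_level=0.6, release_level=0.3):
    """Return (press_index, release_index) pairs from an activation envelope.

    A click starts when the signal rises to press_level (the pinch) and
    ends when it falls back to release_level (the release).
    """
    clicks = []
    press_index = None  # index where the current pinch began, if any
    for i, value in enumerate(envelope):
        if press_index is None and value >= press_level:
            press_index = i  # pinch detected
        elif press_index is not None and value <= release_level:
            clicks.append((press_index, i))  # release completes the click
            press_index = None
    return clicks


# Hypothetical activation values containing two pinch-and-release gestures.
envelope = [0.1, 0.2, 0.7, 0.8, 0.5, 0.2, 0.1, 0.65, 0.4, 0.25]
clicks = detect_clicks(envelope)
print(clicks)
```

A pinch that is never released produces no click in this sketch, which mirrors how a mouse click only registers once the button comes back up.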

In the future, Meta also plans on introducing more advanced controls. Users will be able to touch and move virtual objects, much like dragging and dropping with a mouse.

The EMG technology purchased and developed by Meta will also work alongside Meta’s AI innovations. For instance, if you wanted to exercise in a virtual world, the AI would surface a personalized list of playlists. The EMG technology would track your movements, and the AI would adapt your workout to your needs.

When Will Meta’s EMG Vision Surface?

It isn’t easy to know when we’ll begin to see evidence of Meta’s EMG experimentations in its technology. Despite constant innovation, the company’s “Reality Labs” subdivision has cost Meta a lot of money.

It even forced the company to engage in a significant internal reshuffle, reducing funding for new XR initiatives.

Since then, Meta has stopped and delayed various extended reality projects. The Meta XR smartwatch, Orion smart glasses, and Project Nazare have all been pushed to the sidelines.

Little information is available about Meta’s continued experimentations with EMG technology. From a broad perspective, EMG is a viable solution for UI development compared to more complex neurotech.

Unlike direct brain-computer interfaces, EMG does not require the insertion of a chip or needle. Users can take EMG wristbands off whenever they like, and the device can gradually learn from each user through prolonged use.

Also, EMG technology could be the perfect match for wrist-mounted wearables. Fitting computing resources into an EMG-enabled wristband or intelligent watch is relatively easy.

It also helps that CTRL Labs’ incredible innovations have placed Meta far ahead of the competition. As the Menlo Park-based firm evolves, technology like EMG may appear as another alternative for user experience functionalities.

The Road Ahead: What is EMG Going to Do Next?

It isn’t easy to know whether EMG technology will become a significant part of the next generation of XR headsets and smart glasses. Though Meta is taking some exciting strides in this area, there are still challenges to overcome.

To deploy EMG solutions for AR and XR, Meta and other companies will need to adapt their devices to suit various accessibility considerations. Privacy and security are other challenges, as EMG-enabled devices can read your most personal and private electric impulses.

To that end, FRL Research is currently running a neuroethics program to identify and address these issues early on. If Meta is successful in its quest to transform XR interfaces with EMG, there could be some exciting developments ahead.

What could the next UI of a Meta device accomplish if it can “read the minds” of its users?